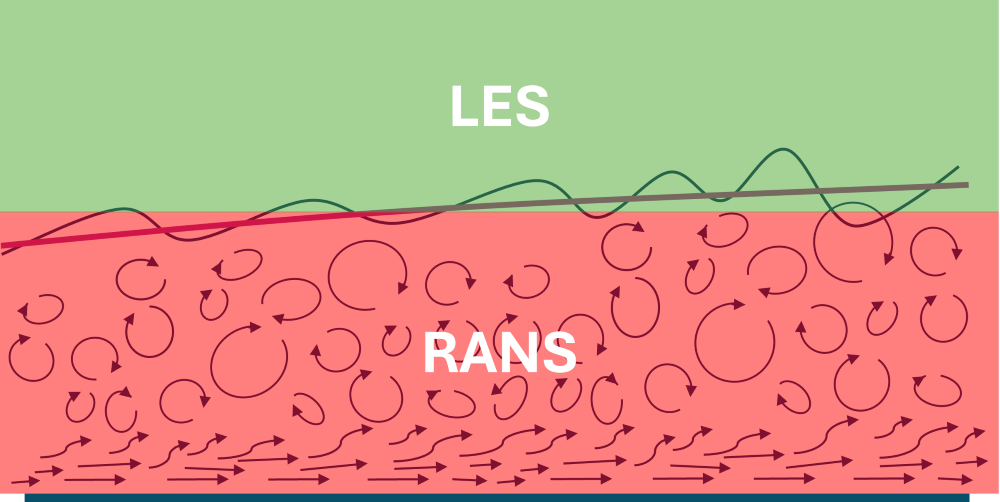

There is a big gap between classical RANS turbulence modelling and scale-resolving approaches like LES and DNS, both in terms of computational cost and what can be resolved. As a result, classical RANS modelling may be cheap but not always able to resolve the physics we want to capture, while LES and DNS may be able to resolve the physics correctly, but being too expensive to run.

This gap is addressed with advanced RANS modelling approaches, which we will review in this article. First, we will look at RANS models that can capture the transition process (classical RANS models assume the flow to be fully turbulent). This allows to capture the transition between laminar to turbulent flows, which is of vital importance if we are trying to reduce aerodynamic (skin friction) drag. We will look at the two most popular models and also what issues exist that hold transition-based RANS modelling back.

The second approach is concerned with hybrid RANS/LES formulation, where we attempt to mix RANS and LES in a clever way. We try to use RANS in regions where we don’t really need LES-like resolution to reduce the computational cost, while we use LES in areas where it is cheap to run while providing superior quality compared to classical RANS modelling.

We will look at Detached Eddy Simulations (DES), Delayed Detached Eddy Simulations (DDES), Improved Delayed Detached Eddy Simulations (IDDES), Scale-adaptive simulations (SAS), and wall-modelled Large Eddy Simulations (WMLES), which are all approaches that fall into the hybrid RANS/LES category. These represent the latest and greatest advances we have made in turbulence modelling in the last few decades, and they have become widely adopted in academic research and industrial applications alike.

If you have never heard about any of these approaches, or you are just vaguely familiar with them, then this article will give you a deep dive into these topics and elevate your understanding of advanced turbulence modelling. By the end of the article, you will know which approach to use for which situations and how these approaches differ, allowing you to select the most appropriate modelling strategy for your CFD simulations!

In this series

- Part 1: How to Derive the Navier-Stokes Equations: From start to end

- Part 2: What are Hyperbolic, parabolic, and elliptic equations in CFD?

- Part 3: How to discretise the Navier-Stokes equations

- Part 4: Explicit vs. Implicit time integration and the CFL condition

- Part 5: Space and time integration schemes for CFD applications

- Part 6: How to implement boundary conditions in CFD

- Part 7: How to solve incompressible and compressible flows in CFD

- Part 8: The origin of turbulence and Direct Numerical Simulations (DNS)

- Part 9: The introduction to Large Eddy Simulations (LES) I wish I had

- Part 10: All you need to know about RANS turbulence modelling in one article

- Part 11: Advanced RANS and hybrid RANS/LES turbulence modelling in CFD

In this article

- Introduction

- Going beyond fully turbulent: The transitional RANS models

- When RANS isn't enough: hybrid RANS-LES models

- Summary

Introduction

At this point, we have come quite far in our journey through turbulence modelling in CFD. We started with the origin of turbulence and how to model it using Direct Numerical Simulations. Then, we looked at Large Eddy Simulations, allowing us to reduce the computational cost while retaining most of the DNS resolution, and then at Reynolds averaged Navier-Stokes (RANS) turbulence modelling, which provides a statistical time-averaged sense of turbulence.

At this point, we could say that we have reviewed this matter quite exhaustively (and we have!), but that would be ignoring some breakthroughs in the field of what I will refer to as advanced RANS turbulence modelling. While RANS itself provides a blisteringly quick path to solution compared to LES and DNS, it achieves this with some serious limitations.

For starters, if you have ever done a simulation around an aerofoil, something that should be seemingly simple to set up and run, you will realise that no matter what you do, getting agreement between your predicted drag from CFD and experiments is really difficult to do. The reason is that aerofoils have some laminar flow over the wing, even at very high Reynolds numbers. Laminar flow produces very different viscous drag (due to a different velocity profile in the boundary layer) compared to turbulent flows.

The reason is that RANS models assume the flow to be fully turbulent, and classical RANS models had no mechanism to capture the transition from laminar to fully turbulent. There has been some development on that in the last decades, and it can significantly enhance the predictive power of RANS simulations. This does not come free, though. We typically have to refine our mesh quite substantially and spend more time on validation than we would with a classical RANS model (where no transition is captured).

There is a high uncertainty around transition modelling using RANS and for that reason it has not been applied in the mainstream CFD best practices, however, in cases where the location of the transition within the boundary layer is of importance, or resolving flow physics that require knowledge of laminar to turbulence transition (e.g. laminar separation bubbles), transitional RANS modelling may be an appealing cheaper option than full blown LES or DNS simulations (which are able to predict transition).

Secondly, there has been a very strong research effort on combining the accuracy of LES, especially away from walls, with the computational cheapness of RANS turbulence modelling near solid walls, to get LES-like results with (U) RANS-like computational costs. This approach is known as hybrid RANS-LES turbulence modelling, and you may be more familiar with the terminology Detached Eddy Simulations (DES), which is the most used implementation of this.

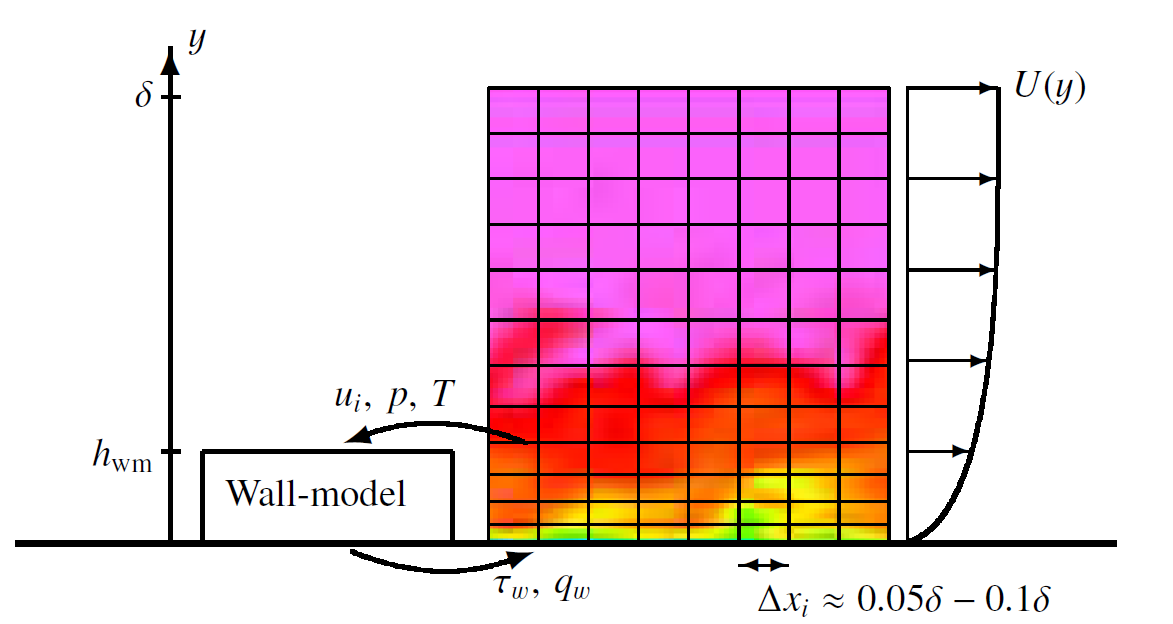

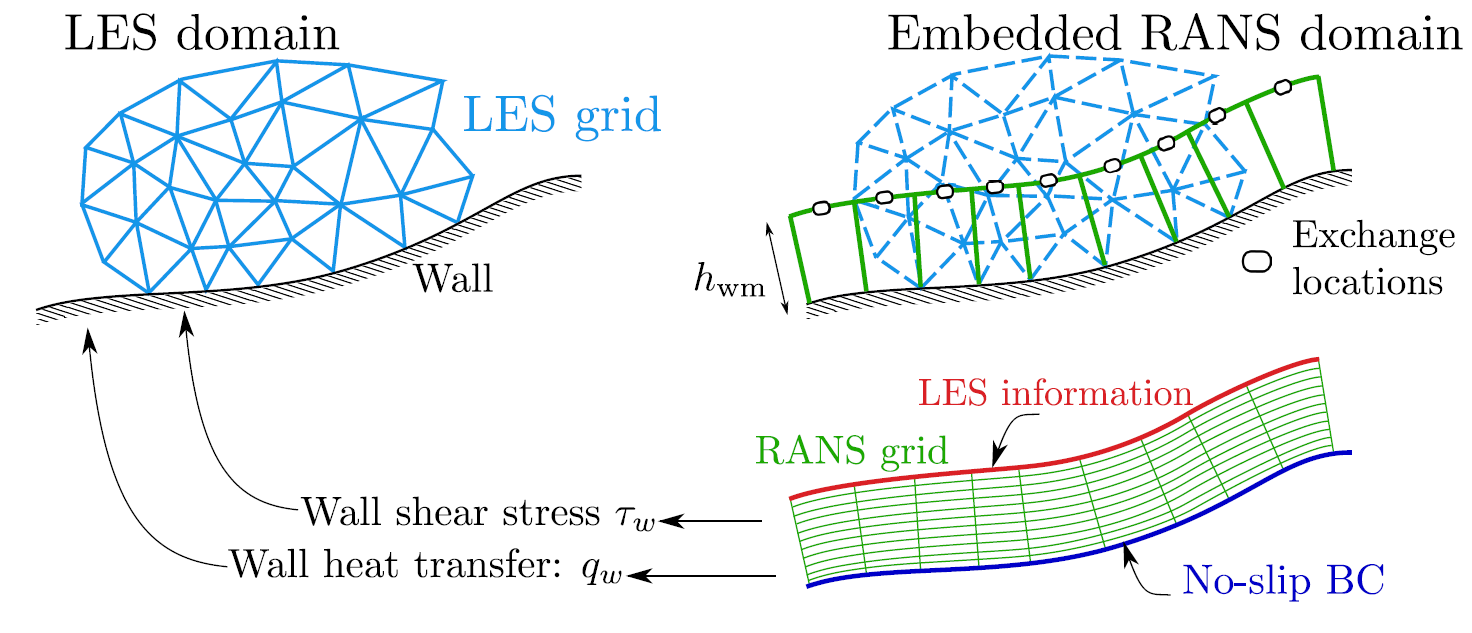

Finally, an alternative approach is to use wall-modelling within LES itself to reduce the meshing requirements near solid walls in LES, making computational costs more akin to (U)RANS simulations. This approach is known as wall-modelled LES (WMLES), and it is something that is attracting more and more attention as well.

Thus, in this article, we will be focusing on these developments to make sure we have covered these techniques as well. This will be our final article on turbulence modelling. Let us get started with transition modelling first in the next section.

Going beyond fully turbulent: The transitional RANS models

As alluded to above, having the option of resolving the transition from laminar to turbulent flows may be an appealing feature to have in RANS turbulence modelling. For aeronautical applications in particular, where we are concerned with streamlined aerodynamic surfaces (i.e., trying to reduce pressure drag as much as possible), we will create substantial laminar flow regions. Laminar flow produces smaller viscous drag, and so if we can maximise these laminar flow regions, we can reduce the drag of our aircraft.

Otto Aviation has proposed and developed a business jet that is making use of as many laminar flow-promoting surfaces as possible. Take a look at the prototype they have developed:

It is making use of NACA 6-series aerofoils, which were developed to promote as much laminar flow as possible. Even the fuselage is shaped in the form of a NACA 6-series aircraft. The propeller is mounted at the rear, pushing the aircraft rather than pulling it through the air (and as a result, flow over the wing and fuselage is largely unaffected by the propeller). There is an interesting discussion on the aerodynamic design of the Celera 500L over at Aviation Stackexchange, which you may want to check for a more detailed discussion.

If we are hired as an aerodynamicist at Otto Aviation and we are tasked with predicting lift and drag for the aircraft, we are in trouble. Classical RANS models, discussed in my previous article on RANS modelling assume the flow to be fully turbulent. As we have established, this will lead to incorrect results in the velocity profile in the boundary layer, and thus, drag predictions.

Thankfully, some turbulence model developers have taken on the burden of introducing a mechanism to distinguish between laminar and fully turbulent flows. As a result, the process of transition can be incorporated into classical RANS models.

In this section, we will look at the two most commonly used models and see how transition is incorporated into RANS modelling.

The Langtry-Menter transition model (\gamma-Re_{\theta,t},\,k-\omega SST)

Ah, we are talking about the first turbulence model, and I am already dropping Menter’s name again. Yes, that is right, Menter, together with Langtry, has given us one of the more popular RANS models that can be used to predict transition. Their model is weird, but from my experience produces the best results of the available transition models. Obviously, this is case-dependent, so your experience may differ.

While the model is weird, it has a certain magic about it, and it is unlike any other turbulence model we have looked at thus far. This is difficult to appreciate if you just look at the equations, so my aim here is to bring these equations to life so we can appreciate what Menter and Langtry came up with.

Let’s put ourselves in their shoes. When Langtry and Menter started their research, there was no serious RANS model available that could handle transition. So they had to invent their own model. Given the popularity of the k-\omega SST model, and the involvement of Menter, it isn’t difficult to see why they picked this model as the starting point. All they had to figure out was how this model could be modified to accommodate transition.

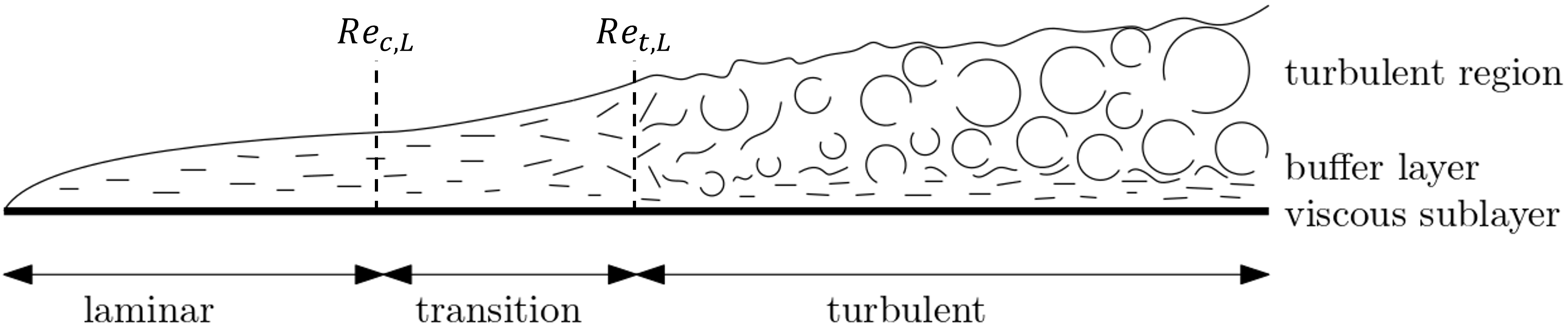

So, let’s look at transition again for a second. Let’s consider the flow over a flat plate and look at the boundary layer that forms. This is shown in the following image:

The boundary layer consists initially of a laminar flow region, which after some point transitions into an intermediate state, the transitional state, after which the flow becomes fully turbulent. There are two characteristic Reynolds numbers here, as shown in the image:

- Re_{c,L}: The critical Reynolds number, where the flow transitions from laminar into the transitional (intermediate) flow state

- Re_{t,L}: The Reynolds number at which the flow becomes fully turbulent.

We always have Re_{c,L} \lt Re_{t,L}. The reference length L is the point along the flat plate at which the flow transitions either into the transitional (i.e., Re_{c,L}) or fully turbulent state (i.e. Re_{t,L}). So how could we codify this into some form of RANS model?

Well, if you have followed my last article on classical RANS models, then you will know that if in doubt, we use a transport equation for an arbitrary quantity we believe represents the flow well.

Ok, so we can probably easily write down the transport equation, but what variables should we be solving for? Well, let’s return to the image above. Langtry and Menter argued that if we model for the critical Reynolds number, i.e., Re_{c,L}, then we know where on a solid surface flow should transition from laminar to turbulent flow. We can then combine this with a variable that indicates if the flow is fully laminar or turbulent, with a value of 0 and 1, respectively. A value between 0 and 1 would then indicate a transitional flow.

This is what they did, and if you are a keen observer, then you would likely find the fundamental flaw with this model immediately. Sure, if we know what Re_{c,L} is on solid walls, this will help us with transition. But what about away from walls? What is the physical significance of Re_{c,L} in the farfield? Well, there is none. We will later see how the transport equation for Re_{c,L} (which will get a different name later) addresses this issue.

So let us then return to the main idea of the Langtry-Menter transition model. We solve the transport equations that we have from the k-\omega SST RANS model, and then we supplement this with two additional transport equations. These solve for the following two properties:

- \gamma: The turbulent intermittency. It is set to 0 in laminar flows and 1 in turbulent flows. Transition can be resolved with \gamma values between 0 and 1.

- Re_{\theta,t}: This is the Reynolds number at which the flow transitions from laminar to turbulent flows. This is essentially the same as Re_{c,L}, with the difference that we use the momentum thickness \theta here instead of some downstream location on a wall, i.e. L. We will see in a bit why this is beneficial.

Let’s look at the intermittency in more detail. It is defined as:

\gamma = \frac{t_{turbulent}}{t_{laminar} + t_{turbulent}}\tag{1}Here, t_{laminar} and t_{turbulent} are durations for which the flow has been observed to be either laminar or turbulent. For example, if I am in the farfield and there is no turbulence and I measure the flow for a total of 10 seconds, then I likely find that t_{laminar}=10s and t_{turbulent}=0s. Thus, inserting this into the definition above, we get \gamma = (0)/(10 + 0)= 0/10 = 0. Therefore, the flow is fully laminar.

If we are somewhere in the turbulent part of a boundary layer or wake, then we would get, for example, t_{laminar}=0s and t_{turbulent}=10s for the same sampling window of 10 seconds. This would result in \gamma=(10)/(0+10)=10/10=1. Thus, the flow is fully turbulent.

However, in the transition region, we may have the generation of some turbulent spots that will decay and turn into laminar flow again. In this case, we

may have, for example, t_{laminar}=8 and t_{turbulent}=2s, which results in \gamma=(2)/(8 + 2)= 2/10 = 0.2, for the same 10 second sampling period.

Take a look at the following video, which visualises these turbulent spots in the transition region:

Thus, \gamma can be used to determine where we are in the flow (laminar, transitional, or turbulent flow). It is assumed to be zero at solid walls until Re_{\theta,t} is larger than the critical value. At this point, we transition into the transition region and \gamma is allowed to grow until it reaches a value of 1. We will see later how this is achieved by the turbulence model.

OK, this is the main idea behind the model, let’s look at some of the equations that go into the model. At the beginning of this section, I mentioned the critical Reynolds number Re_{c,L}, which is the point along a solid wall where the flow transitions to turbulence. But this isn’t a good Reynolds number to begin with.

This Reynolds number is based on some reference length L downstream of the plate at which transition occurs. This will depend on freestream conditions, as well as the geometry itself. For example, a flat plate may transition later to turbulence than an airfoil, even if the freestream parameters are different (simply because there is curvature on the airfoil). Thus, the value of L is somewhat arbitrary, and if our goal is to create a robust model, we would prefer not to have a dependence on L.

Instead, we may want to reformulate the Reynolds number not in terms of a reference length L but instead based on the momentum thickness \theta. This is defined as:

\theta = \int_0^\delta \frac{u(y)}{U_\infty}\left(1-\frac{u(y)}{U_\infty}\right)\mathrm{d}y

\tag{2}

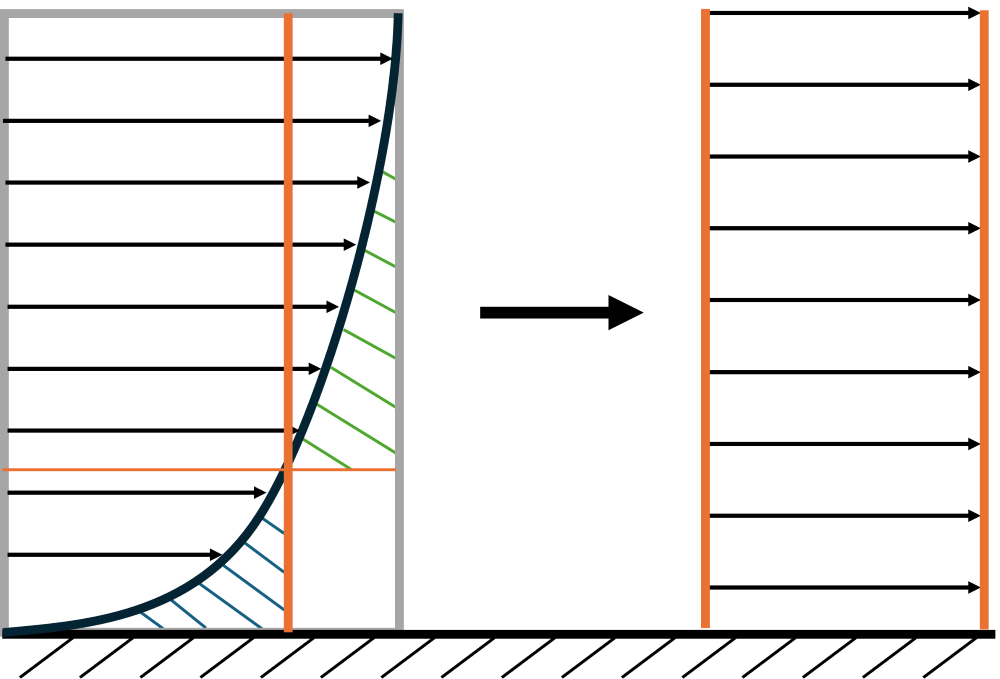

What does this physically represent? Well, If we integrate along the entire boundary layer profile, we would get a certain momentum. Imagine we want to replace this velocity profile now with a flat velocity profile, but one that has the same integrated momentum. The momentum thickness tells us the height within a boundary layer at which we could replace the velocity profile with a constant velocity profile. We take the velocity at the height of the momentum thickness from the real velocity profile.

This sounds a bit convoluted, so let’s look at this in a sketch:

Here, the velocity profile on the right is constant across the boundary layer, whereas the velocity profile on the left (the real velocity profile) has a quadratic or power law shape (depending on the flow regime). Both velocity profiles have the same momentum (\int\rho u(y) \mathrm{d}y).

We see that we can also graphically determine the momentum thickness by placing the entire velocity profile inside a rectangle. We then seek to find a line (shown in orange) that cuts the velocity profile at a point where the green and blue shaded areas are equal.

Ok, so now we’ve got rid of the arbitrary reference length L, job done, right? Well, let’s look at Eq.(2) once more. Notice how we use the definition of the boundary layer thickness to determine the integration bounds, i.e. we integrate from 0 to \delta, where \delta is defined as the point on the velocity profile where we have reached 99% freestream velocity.

This may work for a flat plate, but what if we investigate wake flows? For example, we may want to study the aerodynamic efficiency of a vehicle following another. The second vehicle will be in the turbulent wake of the other. So, the question then becomes, what is \delta here? The second vehicle will experience a mixture of its own turbulent boundary layer and the wake from the leading car. The momentum thickness isn’t clearly defined in this case.

Since we want to write a RANS turbulence model that is robust and can be used for different types of flow, we need to get rid of the \delta dependence. As luck would have it, there is a Reynolds number we can define solely on local flow quantities, which has a strong correlation to the Reynolds number based on the momentum thickness. This is the Reynolds number based on the local vorticity. This is given as:

Re_v=\frac{\rho d^2}{\mu}\bigg|\frac{\mathrm{d}u}{\mathrm{d}y}\bigg|

\tag{3}

Here, we use the velocity gradient near the solid wall. Since a velocity gradient has units of 1/s, we need to square our reference length, which in this case is the distance away from the wall, i.e. d. This definition assumes that we are looking at a flat plate with the y direction in the wall normal direction and the velocity u flowing along the wall. We can mentally replace that by the magnitude of the strain-rate tensor, as we have done so often in previous articles, to generalise this to arbitrary curved geometries.

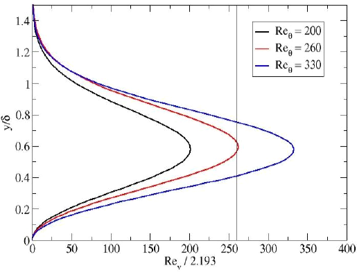

Let’s plot this vorticity-based Reynolds number along the wall normal direction. This is shown in the following figure:

We can see the general shape of the Reynolds number throughout the boundary layer height. This profile is seemingly divided by this magic number 2.193 on the x-axis, why is that? Well, if we look at what the corresponding Reynolds number is, now expressed in terms of the momentum thickness, then we see that scaling the vorticity-based Reynolds number results in its max value to be equivalent to the critical momentum thickness-based Reynolds number. In other words, we have the following functional relationship:

Re_{\theta,t}=\frac{Re_{v,max}}{2.193}\tag{4}The critical momentum thickness-based Reynolds number, referred to from now on as Re_{\theta,t}, is the point at which the flow transitions from laminar to turbulent flow. Thus, based on local properties, such as the velocity gradient tensor, we can calculate what this transition point will be. We can use this and define a function F_{onset} that will be 0 in regions where the flow is fully laminar, and greater than zero in regions where transition to turbulence should happen.

This function is given as:

F_{onset}\propto\max\left(\frac{Re_{v,max}}{2.193Re_{\theta,t}}-1, 0\right)\tag{5}We have to subtract 1 from the first argument in the \max() statement to ensure that the value is negative in regions where we have laminar flows. The value of F_{onset} is clipped at 0 so it will never become negative.

Thus, Re_{v,max} is something we can determine from local flow properties at the wall, but what about Re_{\theta,t}? The first question we need to ask ourselves is what this value should depend on? If you look at my discussion on the mechanisms that trigger transition, one such parameter is the turbulent intensity.

This parameter is defined as the fluctuation of the velocity field with respect to the freestream (farfield) velocity. This can be expressed as:

Tu=\frac{u'}{U_\infty}\tag{6}If we wanted to be a clever dick (of course, we want to be, after all, we talk about turbulence modelling which features the cream de la creme of clever dicks), we could expand the velocity fluctuations as:

u' = \frac{u'+v'+w'}{3} = \sqrt{\frac{u'u'+v'v'+w'w'}{3}}=\sqrt{\frac{2}{3}k}\tag{7}Here, k is the turbulent kinetic energy, defined as k=0.5(u'u'+v'v'+w'w'). The above assumes that the fluctuations are largely the same in each direction. Well, I said clever dick, but in the end, this expansion is a necessary one, as we do not have information on u', v', or w' in RANS simulations. We do, however, typically know what the turbulent kinetic energy is, and so we can compute the turbulent intensity Tu using the turbulent kinetic energy as:

Tu=\frac{\sqrt{\frac{2}{3}k}}{U_\infty}\tag{8}Doing some correlation work in the wind tunnel, for simple boundary layer and flat plate cases, we can determine the relationship between the turbulent intensity and the critical Reynolds number at which the flow transitions as:

Re_{\theta,t}=

\begin{cases}

1173.51 - 589.428\cdot Tu \cdot 100 + \frac{0.2196}{(Tu\cdot 100)^2} &Tu \le 1.3\%\\[1em]

\frac{331.5}{(Tu\cdot 100 - 0.5658)^{0.671}} &Tu \gt 1.3\%

\end{cases}

\tag{9}

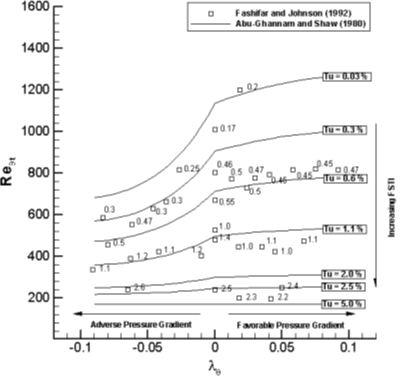

More advanced formulations are available that also take the pressure gradient into account, which is then expressed as:

\lambda_\theta = \frac{\theta^2}{\mu}\frac{1}{U_\infty}\frac{\mathrm{d}p}{\mathrm{d}x}\tag{10}For example, Abu-Ghannam and Shaw proposed the following relationship between the critical Reynolds number, the pressure gradient, and the turbulence intensity:

Whichever approach we choose, we need to keep in mind that all these relations make some limiting assumptions that will influence our simulations. While it may be appropriate to determine the critical Reynolds number from the pressure gradient and turbulent intensity for a large number of applications, this may fail to give satisfactory answers for other cases.

For example, looking at the mechanisms that trigger transition again, one such mechanism is cross-flow induced transition. This is not dependent on the pressure gradient (the farfield for aeronautical applications has a constant pressure and thus no gradient), and this transition would still occur with very low turbulent intensities. Clearly, other parameters, related to swirling motions, play a role here which are neglected in these empirical correlations.

But let’s say we are happy that these correlations cover our use case. Then, we know what the critical Reynolds number ought to be from our empirical correlations, and we need to establish the transition length so we know when the flow is fully turbulent. This is done by the F_{length} function, although it does not necessarily provide a length in metres that is equivalent to the real transition length. If anything, it is proportional to the transition length.

This transition length takes a few parameters, the first being a function that calculates the F_{length}^* parameter based on the critical Reynolds number:

F_{length}^*=

\begin{cases}

-119.27\cdot 10^{-4} Re_{\theta,t} - 132.567\cdot 10^{-6} \sqrt{Re_{\theta,t}} + 398.189\cdot 10^{-1} &0 \le Re_{\theta,t} \lt 400 \\[1em]

-101.695 \cdot 10^{-8}Re_{\theta,t}^3 -123.939 \cdot 10^{-2}Re_{\theta,t} + 194.548\cdot 10^{-5} \sqrt{Re_{\theta,t}} + 263.404 &400\le Re_{\theta,t} \lt 596 \\[1em]

-3\cdot 10^{-4}Re_{\theta,t} + 0.6788 &596 \lt Re_{\theta,t} \le 1200\\[1em]

0.3188 &Re_{\theta,t} \ge 1200

\end{cases}\tag{11}We need two additional expressions that scale the transition length, which are characteristic of the viscous sublayer. These are given as:

F_{sub}=\exp\left[-\left(\frac{Re_\omega}{200}\right)^2\right]\\[1em]

Re_\omega=\frac{\omega d^2}{\nu}\tag{12}With this, we are able to compute the transition length that is proportional to the physical transition length but not necessarily equivalent. This is given as:

F_{length}=F_{length}^*(1-F_{sub})+ 40F_{sub}\tag{13}Let’s take stock of what we have achieved thus far. We have identified a mechanism to compute the local Reynolds number based on the momentum thickness using only local parameters. This allows us to compute the Reynolds number without having to specify a reference length L, and it also allows us to compute a Reynolds number for arbitrary flows.

Now we need to combine this with the transport equations for \gamma and Re_{\theta,t}. Let’s start with \gamma. The transport equation is given as:

\frac{\partial \gamma}{\partial t} + (U\cdot \nabla)\gamma = \nabla\cdot\left[\left(\nu+\frac{\nu_t}{\sigma_\gamma}\right)\nabla\gamma\right] + P_{\gamma 1} - D_{\gamma 1} + P_{\gamma 2} - D_{\gamma 2}

\tag{14}

If you are missing an intuition for what a transport equation looks like, I’d recommend going through my previous article on classical RANS modelling. It will take some time to read, but it is time well spent.

We have our usual terms here for the change in time, advection in space, and diffusion in time. What is different here is that we have two separate production and destruction terms. Typically, RANS models only feature a single production and destruction term, but not this model. Why? Well, \gamma is an indicator of whether the flow is turbulent or not, and there are different mechanisms that can trigger turbulence. Thus, we need more than just a single pair of production and destruction terms.

Let’s look at the first pair, which is the transition onset. We can think of this as the process that models natural transition. Once we have reached a critical Reynolds number, the flow will naturally transition to turbulence. The first production term is given as:

P_{\gamma 1} = 2F_{length}\sqrt{2\mathbf{S}:\mathbf{S}}(\gamma F_{onset})^2\tag{15}We saw that F_{onset} will become larger than 0 once we are in the transitional flow regime; otherwise, it will be zero for laminar flows, and so the production term will also be zero. It is also scaled by the F_{length} function, meaning a larger transition length will create turbulence faster (higher production of \gamma). Finally, we multiply by the magnitude of the strain-rate tensor, meaning that the strength of the mean flow gradients will also scale the production term. Stronger gradients will result in more production of \gamma and thus faster transition to turbulence.

The destruction term also has some interesting features. It is given as:

D_{\gamma 1}= \gamma P_{\gamma 1}\tag{16}We simply scale it by the production term. Let’s think about some conditions that can happen:

- If the flow is laminar, then we have \gamma = 0 and so the destruction term must be zero as well.

- For fully turbulent flows, we have \gamma = 1.0 and so production and dissipation are exactly match. This means any \gamma that is created will immediately destroyed, creating an equilibrium condition for fully turbulent flows.

- Only in cases where the flow is between laminar and turbulent, i.e. 0 \lt \gamma \lt 1, we have more production than destruction, since the destruction term is scaled by \gamma. By definition, it must therefore be smaller than the production term. Thus, \gamma can only be created in the transition region. Once it reaches a value of 1, it will stay at 1.

The second pair of production/destruction relates to relaminarisation. Flow can locally become turbulent but then may experience not sufficient energy to sustain that turbulent motion, and the flow will become laminar again. The second production term will be able to capture this behaviour, which allows this model to capture/model laminar separation bubbles, where this phenomenon occurs.

Given that Menter has an extensive history with NASA, which in turn are quite keenly interested in aerospace applications, it is not difficult to see why this second term was included. The production term is given as:

P_{\gamma 2}=c_{a2}\Omega d F_{turb}\tag{17}with the corresponding destruction term

E_{\gamma 2} = c_{e2}\gamma P_{gamma 2}\tag{18}Here, we need to define the additional functional relationship:

F_{turb}= e^{-\frac{k}{4\nu\omega}}\tag{19}So what have we gained here? \Omega is the vorticity tensor and \omega is the specific dissipation rate, which is only non-zero close to solid walls. In the farfield, \omega is close to zero, making F_{turb} go to zero in the farfield but to non-zero values near solid walls (e.g. where laminar separation bubbles would be located). We use the same trick here with the destruction term, i.e. it is scaled by the production term and \gamma.

Let’s talk about the elephant in the room, then. I have left the best for last, and by that, I mean the critical Reynolds number Re_{\theta,t}. The transport equation for it is given as:

\frac{\partial Re_{\theta,t}}{\partial t} + (\mathbf{u}\cdot \nabla)Re_{\theta,t} = \nabla\cdot\left[\sigma_{\theta,t}\left(\nu+\nu_t\right)\nabla Re_{\theta,t}\right] + P_{\theta t}

\tag{20}

Let’s just appreciate how ridiculous this is. The critical Reynolds number Re_{\theta,t} is the Reynolds number which determines where the flow will transition from laminar to turbulent at solid walls. What is the meaning of Re_{\theta,t} in the farfield? There is none! But, we are solving a transport equation, so clearly we will have a value for Re_{\theta,t} everywhere in the domain. Is this a problem?

Well, from a physical point of view, yes, having information about Re_{\theta,t} in the farfield is pointless and of no use. However, from an engineering point of view, we accept that we break our physical understanding of the world every now and then if it suits us (and the results we are getting are not too bad). This is what we are doing here as well.

The transport equation has been designed with some clever mechanisms that allow us to use it and to get good results despite the oddity of using a transport equation for a value that is only required near solid walls.

The way that we make the transport equation for Re_{\theta,t} work for us is in two steps:

- First, we impose the critical Reynolds number as a Dirichlet boundary condition at the inlet. We may know this value from experiments, or we can use an empirical correlation such as given by Eq.(9).

- Second, we enforce this value to be constant in the farfield. Only close to solid walls do we allow it to change so that ti can adjust to local changes in the geometry, e.g. curvature of the wall.

Let’s see how this is achieved through the transport equation given by Eq.(20). We have our usual 3 terms that we have seen in any other transport equation as well. These are responsible for time changes in Re_{\theta,t}, the convection of Re_{\theta,t} from one place to another, as well as the diffusion of Re_{\theta,t} in space. But notice what else is given by Eq.(20), or rather what is absent.

We have a single production term P_{\theta t} but no corresponding destruction term. This is at odds with how we typically construct our transport equations for RANS turbulence models. We usually require that a quantity can be produced or destroyed, but not in this case. So let us look at the production term in more detail. It is given as:

P_{\theta t} = \frac{c_{\theta t}}{\tau}\left(\overline{Re_{\theta t}} - Re_{\theta t}\right)\left(1-F_{\theta t}\right)

\tag{21}

Let’s start at the back. We have a quantity F_{\theta t}, which is given by:

F_{\theta t}=

\begin{cases}

1 & \text{laminar Boundary Layer}\\[1em]

0 & \text{freestream}

\end{cases}\tag{22}From the turbulent intermittency \gamma, we know that we are in a laminar boundary layer if we have \gamma=0. Therefore, we can determine the value of this function. If we are in a laminar boundary layer, the term inside the last brackets reads \left(1-F_{\theta t}\right)=1-1=0. Therefore, the entire equation is multiplied by 0, making the production term P_{\theta t}=0 in laminar boundary layers. If we are in the farfield, however, this is then switched to zero, so that the production term can be non-zero.

Now, let’s examine the first part of the production term. We have a closure coefficient c_{\theta t} that is constant, as well as a timescale \tau, that is given by:

\tau=\frac{500\nu}{u^2}\tag{23}Similar to how the Reynolds number is defined, we have here a ratio of the viscous (\nu) to the inviscid (u) forces, multiplied by some arbitrary factor. Thus, you can think of this timescale for how long it would take the flow to return to a state of equilibrium. Here is a simple (mental) experiment you can perform (or go splurging on supplies, this is an experiment you can do at home with the kids, why not …).

Take two equal containers, and fill one of them with water and one with honey. Run your finger through both with the same velocity. Which of these will return to an equilibrium state first? I.e., which of these will go back to a state where no flow is detected in the container? Clearly, honey will return quickly to this state, owing to its high viscosity. Looking at the definition of \tau, we could therefore state, as long as u_{honey}=u_{water}, that \tau_{water}\lt \tau_{honey}.

A higher value of \tau will reduce the time it takes for the flow to return to its equilibrium state, as it is given in the denominator of Eq.(21).

And then, we have the term \overline{Re_{\theta t}} - Re_{\theta t}. Here, \overline{Re_{\theta t}} is the value for Re_{\theta t} that we impose at the inlet, i.e. the value for which we know the flow to transition to turbulence. Re_{\theta t} is the value that is obtained from our transport equation (the literature also sometimes uses the reverse definition, i.e. Re_{\theta t} being the value set at the inlet, and \overline{Re_{\theta t}} solved for by the transport equation).

Thus, if the value of Re_{\theta t} we have solved for is the same as the one imposed at the inlet, the difference \overline{Re_{\theta t}} - Re_{\theta t} must be zero. Therefore, the production term is zero. However, if we have \overline{Re_{\theta t}} \gt Re_{\theta t}, then the computed value for Re_{\theta t} is smaller than \overline{Re_{\theta t}} and so their difference \overline{Re_{\theta t}} - Re_{\theta t} is positive. If \overline{Re_{\theta t}} \lt Re_{\theta t}, then Re_{\theta t} is greater than \overline{Re_{\theta t}}. The difference \overline{Re_{\theta t}} - Re_{\theta t} is now negative.

This, the production term, really, is a hybrid production/destruction term. Depending on how much (or little) of Re_{\theta t} we have produced, we will either add or subtract this difference from the transport equation of Re_{\theta t}. We multiply this difference by the relaxation time scale \tau (or rather, its inverse) to allow fluids to reach equilibrium at different times, proportional to their viscosity. Furthermore, the production term is completely switched off in laminar flow boundary layers.

The second secret of the Re_{\theta t} transport equation is the diffusion itself. We saw in Eq.(21) that the diffusive term is multiplied by \sigma_{\theta,t}, another closure coefficient. The value for \sigma_{\theta,t}, as well as other closure coefficients typically used for the diffusive term in other RANS models, is shown below:

- \sigma_{\theta,t} = 10 (current model, i.e. \gamma-Re_{\theta,t},\,k-\omega SST model)

- \sigma_k = 0.6 (k-\omega model)

- \sigma_\omega = 0.5 (k-\omega model)

- \sigma_{SA} = 1.5 (Spalart-Allmaras model)

As we can see, a value of \sigma_{\theta,t} = 10 is much larger (about one magnitude) than any other diffusive closure coefficients taken from other models. While some model improvements were proposed over the years where \sigma_{\theta,t} has changed, this value shows the importance diffusion plays near solid boundaries.

That is, the flow is advected from the inlet towards solid boundaries, e.g. an airfoil. On its way from the inlet to the solid boundaries, the production term ensures that Re_{\theta t} remains constant. Closer to the solid surfaces (e.g. airfoil), the transport equation for Re_{\theta t} diffuses its value that it got from the inlet around the solid boundary. This process is highlighted for the flow around an airfoil, where we see contours for Re_{\theta t}, both for the farfield and a close-up look near the airfoil:

The inlet condition gets advected close to the airfoil, and then diffusion is taking over, wrapping the values for Re_{\theta t} around the arifoil, like a vacuum pump. In this way, we are able to wrap the airfoil (solid walls) with values for Re_{\theta t} that were imposed at the inlet, which are allowed to locally change based on the geometry’s curvature.

I told you, it is a weird model, but it does work quite well, at least for simple test cases. I haven’t really used it for anything beyond airfoil simulations, and there are reasons why you may not either. I will pick up these issues when we talk about the meshing requirements for transitional RANS models.

Having established now the two additional transport equations we need to solve in the \gamma-Re_{\theta,t},\,k-\omega SST model, it is time to combine these results with the standard k-\omega SST model. The standard \omega equation from the k-\omega SST model is used and repeated below for the sake of completeness:

\frac{\partial \omega}{\partial t} + \left(\mathbf{u}\cdot\nabla\right)\omega =

\nabla\cdot\left[\left(\nu+\sigma_\omega\nu_t\right)\nabla \omega\right] + (1-F_1)\sigma_{\omega2}\frac{2}{\omega}\nabla \omega\nabla k +

\frac{\gamma}{\nu_t}P - \beta\omega^2

\tag{24}

However, the transport equation for the turbulent kinetic energy k is modified as shown below:

\frac{\partial k}{\partial t} + (\mathbf{u}\cdot\nabla)k = \nabla\cdot\left[\left(\nu+\sigma^*\nu_t\right)\nabla k\right] + \hat{P}_k - \hat{D}_k

\tag{25}

Here, we are modifying the production and destruction terms. The production term is given as:

\hat{P}_k=\gamma P_k \\[1em]

P_k = \tau^{RANS}:\nabla\mathbf{u}

\tag{26}

Here, we simply multiply the production of turbulent kinetic energy by the value of \gamma. Intuitively speaking, this makes sense. If there is no turbulence, we can produce any, and so where \gamma is zero, the production of turbulent kinetic energy must be zero as well. Turbulence is only generated in the transition regime, i.e. where 0 \gt \gamma \gt 1. The production term does satisfy this requirement. How about the destruction term? Well, it is given as:

\hat{D}_k = \min\left(\max\left(\gamma, 0.1\right), 1.0\right)D_k \\[1em]

D_k = \beta^* k\omega\tag{27}This is another weird property of the \gamma-Re_{\theta,t},\,k-\omega SST model. As we can see, the lowest value for the destruction that can be obtained is 0.1D_k. The highest is 1.0 D_k. While the highest value makes sense, i.e. this is the same as the destruction term in the original k-\omega SST model, the lowest value does not. Regardless of the flow, there will always be some destruction.

As we have explored in my article on numerical dissipation, we saw that we always need some form of dissipation to stabilise the flow. This could be either due to the fact that we ignored some of the small-scale dissipative effects (due to using a coarse grid), or it could be to dampen the non-linearities in the flow that only get exacerbated by numerical round-off effects. Thus, some dissipation may reduce accuracy, but it will also promote stability of the numerical procedure.

And this is the \gamma-Re_{\theta,t},\,k-\omega SST model in a nutshell. We solve two additional transport equations for the turbulent intermittency \gamma and the critical Reynolds number at which flow ought to transition, i.e. Re_{\theta,t}. With these two additional variables, we know where the flow is locally laminar, where transition takes place, and where the fully turbulent boundary layer is reached. As a result, we hope to get more accurate flow predictions near solid walls, mainly skin friction drag and separation points.

As we have seen, there were some oddities with this model. We impose the critical Reynolds number at the inlet and then hope to advect it close to solid walls. There were a lot of engineering assumptions at play here. Perhaps your background is in physics, or maths, in which case this model may feel more like an engineer went rogue with the laws of physics, but fear not, the CFD community has a model just for you, which we will look at in the next section.

The k - k_l - \omega model

I personally really like the k-k_l-\omega model. I really do. Perhaps this is coming from the fact that the model we looked at in the previous section was my first introduction to transition models. It felt weird, and to this day, whenever I use the \gamma-Re_{\theta,t},\,k-\omega SST model, something just doesn’t feel right.

The k-k_l-\omega model, on the other hand, is firmly rooted in physics. The model attempts to capture transition by modelling the physical process of transition, and all additional terms in the equation can be attributed to a real physical phenomenon that we can observe and measure in real life. So then, what is this more physically inspired transitional model?

The main idea behind the k-k_l-\omega model is that we have two separate kinetic energies:

- k, or k_T: The turbulent kinetic energy per unit mass, formed by the velocity fluctuations, i.e. k=k_T=0.5(u'<sup>2 + v'</sup>2 + w'^2)

- k_l: The laminar kinetic energy per unit mass, formed by the instantaneous velocities k_l=0.5(u<sup>2 + v</sup>2 + w^2)

In a laminar flow, we have k_T=0 and k_l\gt 0, for a turbulent flow, we have k_T\gt 0 and k_l = 0, and then for any intermittent flow we have k_T\gt 0 and k_l\gt 0. We can also express the total kinetic energy, k_{TOT}, as k_{TOT} = k_l + k_T. Fun fact, the word TOT translates to death from German to English. I like to think of k_{TOT} to be the lethal or deadly kinetic energy (but I doubt you will find any textbook adopting this terminology anytime soon!).

So, we have two separate kinetic energies. Their sum is the total kinetic energy. The idea is that we model two separate transport equations for both the laminar and turbulent kinetic energy, and so based on the kinetic energy we have, we can distinguish between laminar and turbulent flows. For example, if we have a laminar boundary layer, we could assert this by computing the ratio k_T/k_l. Since there is no turbulent kinetic energy, k_T=0, and the ratio k_T/k_l becomes zero. This is then similar to the quantity \gamma, where a value of \gamma=0 indicates laminar flow.

The reverse, however, is a bit trickier. For a fully turbulent flow, where k_l=0, the ratio k_T/k_l becomes infinity. So we can’t directly compute an equivalent quantity like \gamma with this model, but we can see that we certainly can differentiate between the different regions.

The main question we have to ask ourselves is, how do we model the transfer from laminar to turbulent kinetic energy? Well, let’s have a look at the transport equations for both k_T and k_l. For the turbulent kinetic energy k_T, we have:

\frac{\partial k_T}{\partial t} + (\mathbf{u}\cdot \nabla)k_T = \nabla\cdot\left[\left(\nu+\frac{\alpha_T}{\sigma_k}\right)\nabla k_T\right] + P_{k_T} - \omega k_T - D_T + R_{BP} + R_{NAT}

\tag{28}

And, for the laminar kinetic energy k_l, we have:

\frac{\partial k_l}{\partial t} + (\mathbf{u}\cdot \nabla)k_l = \nabla\cdot\left[\nu\nabla k_l\right] + P_{k_l} - D_T - R_{BP} - R_{NAT}

\tag{29}

Both equations have the standard time derivative, convection, and diffusive terms, as we have seen so many times with other RANS models based on transport equations. However, there are two nuances here:

- The diffusion term in the turbulent kinetic energy contains an effective diffusivity \alpha_T, instead of directly using the turbulent viscosity \nu_T here. This allows the model to have a more refined approach to computing the turbulent viscosity.

- The laminar kinetic energy only depends on, well, the laminar (or physical) viscosity \nu.

We then see the usual suspects, i.e. both equations feature a construction term, as well as an additional term D_T, that is akin to the cross-diffusion term in the k-\omega SST term. While the turbulent kinetic energy k_T features a destruction term, i.e. -\omega k_T, there is no destruction term in the laminar kinetic energy equation for k_l.

If we think about this, this makes sense. Laminar kinetic energy isn’t destroyed, but rather, it is transferred into turbulent kinetic energy. We do have an explicit equation to solve for this, and therefore, we do not need to add a source term to balance the equation, but instead, transfer laminar kinetic energy to the turbulent kinetic energy transport equation, instead of destroying it through a source term.

The turbulent kinetic energy equation, however, does require a source term, as its energy gets eventually converted into heat at the smallest scales, and we do not resolve this explicitly. Instead, we model this dissipative behaviour through the source term. But, if we wanted to, we could create a new transport equation, model the heat dissipation at the smallest scales (which would be challenging since we do not resolve the smallest scales), and transfer any heat dissipation at the smallest scales to this transport equation. In this case, we could get rid of the source term in the k_T equation.

So, how is the energy transfer from the laminar to the turbulent kinetic energy? This is done by the two additional terms R_{BP} and R_{NAT}, which are introduced to model bypass transition and natural transition. Notice how these terms are negative in the k_l equation but positive in the k_T equation.

Thus, if the model detects that bypass or natural transition occurs at the moment, it will reduce the amount of laminar kinetic energy k_l (negative contributions of R_{BP} and R_{NAT}) and add this to the turbulent kinetic energy. This provides a clean mechanism to transfer kinetic energy from one form to the other. Since this transfer appears with the same magnitude but opposite sign, we conserve the total kinetic energy k_{TOT}.

So naturally, the next question we must ask ourselves is, what are these additional source terms? Let us start our discussion on R_{BP}. Walter and Cokljat, the authors of this model, argue that transition starts when turbulence starts growing faster than viscosity can smooth it out.

If we look at turbulence production in RANS equations, we saw previously that the production of turbulence is typically linked to the velocity gradient, specifically, the strain-rate tensor. The strain rate tensor \mathbf{S} has units of 1/s, so a sensible turbulence time scale could be expressed as t_{turbulent}\propto 1/\mathbf{S}. Similarly, the viscous dissipation time scale can be computed based on the viscosity and a length scale (e.g. the eddy size) as t_{viscous}\propto l^2/\nu, purely on dimensional grounds.

Thus, if t_{turbulence}\gt t_{viscous}, then turbulence generation is stronger than the viscous dissipation, and the flow starts to transition to turbulence.

As an analogy, imagine you are trying to light up a barbecue. You have your coals, a source of heat (a lighter), and the environment (potentially some wind gusts). You are trying to transition the coals from a non-lit to a lit state, by exposing the coal to heat. Initially, there will be some resistance by the coal being lit (similar to viscous dissipation).

The heat from your lighter may not be enough to turn the coals on. But, you may either have a favourable gust of wind (or average wind), or you can use blowing to add oxygen yourself. The influx of additional oxygen will energise the heat source and eventually overcome the resistance of the coal to turn on. Similarly, once a sufficient amount of turbulence is being produced, it will overcome the viscous forces and the flow will transition to turbulence.

This process is modelled by the R_{BP} and R_{NAT} source terms, which, as mentioned previously, will switch on either bypass or natural transitions. The R_{BP} transition is modelled as:

R_{BP}=C_R \beta_{BP} k_L \frac{\omega}{f_W}

\tag{30}

Here, \beta_{BP} is the term responsible for checking if the turbulent characteristic timescale has become smaller than the viscous characteristic timescale. It is given by:

\beta_{BP} = 1 - \exp\left(-\frac{\phi_{BP}}{A_{BP}}\right)

\tag{31}

From this expression, we cannot appreciate this fully, but instead, we need to look at the definition of \phi_{BP}, which in turn is given as:

\phi_{BP}=\max\left[\left(\frac{k_T}{\nu\Omega} - C_{BP,crit}, 0\right)\right]\tag{32}Let’s look at the first term within the \max() statement. Here, we see the turbulent kinetic energy k_T, with units of m^2/s^2. This is divided by the product of \nu\Omega. \nu is the laminar viscosity in units of m^2/s, and \Omega is the vorticity tensor, with units of 1/s. Thus, combining all dimensions, we have a dimensionless expression as the first term.

C_{BP,crit} is set to 1.2, meaning that if the turbulent kinetic energy k_T is 20% larger than the product of the vorticity tensor and viscosity, we get a value that is greater than zero. So, for laminar flow, we have \phi_{BP}=0, but \phi_{BP}\gt 0 for transitional and turbulent flows.

If we look again at Eq.(\ref{eq:eq:beta-bp}), we see that a zero value of \phi_{BP} will result in \beta_{BP}=0, since \exp(1)=0. If \beta_{BP} is zero, then R_{BP}, i.e. Eq.(30), must also be zero. Therefore, no transition will take place. But, if \phi_{BP} is greater than zero, the exponent in Eq.(31) will be less than 1, and so \beta_{BP}\gt 0. Thus, we will start to generate bypass transition as we have R_{BP} \gt 0.

The process for natural transition follows pretty much the same process. We have our source term for R_{NAT} defined as:

R_{NAT}=C_{R,NAT} \beta_{BP} k_L \Omega\tag{33}Here, \beta_{NAT} determines again whether natural transition occurs or not. This is defined as:

\beta_{NAT} = 1 - \exp\left(-\frac{\phi_{NAT}}{A_{NAT}}\right)\tag{34}Again, we have a quantity \phi_{NAT} that determines whether the characteristic turbulent timescale is larger than the viscous timescale to promote natural transition. This equation is given by:

\phi_{NAT} = \max\left[\left(Re\Omega - \frac{C_{NAT,crit}}{f_{NAT,crit}}\right), 0\right]\tag{35}Now we are using the Reynolds number defined by the vorticity tensor (magnitude) to judge whether turbulence production prevails. This Reynolds number is defined as:

Re_\Omega = \frac{d^2 \Omega}{\nu}\tag{36}We again have a closure coefficient C_{NAT,crit}, as well as an additional function f_{NAT,crit} that determines the threshold for the vorticity-based Reynolds number which needs to be reached for turbulence production to commence.

Thus, we have established what the two source terms R_{BP} and R_{NAT} represent and how they are computed. Once they are non-zero, turbulence production commences, and we add/subtract an equal amount within the laminar and turbulent kinetic energy equation to predict the transition from laminar to turbulent flows.

Finally, let us turn our attention to the \omega transport equation, which will also need to be slightly modified. But let us remind ourselves what the purpose is of the \omega equation. In general, when we talk about RANS turbulence modelling, we need to solve for two additional turbulent quantities to compute a length and velocity scale. This is because we need to obtain a relation for the turbulent viscosity \nu_t, which has units of m^2/s, i.e. a velocity multiplied by a length.

Unless we solve for some form of turbulent viscosity directly (like we do in the Spalart-Allmaras model), we need at least two transport equations that, combined, will yield a value for the turbulent viscosity. In the case of the turbulent kinetic energy and specific dissipation rate, we can simply combine them in the following way to compute the turbulent viscosity \nu_t:

\nu_t=\frac{k_T}{\omega}

\tag{37}

Thus, let us look at how \omega is computed. Its modified transport equation is given by:

\frac{\partial \omega}{\partial t} + (\mathbf{u}\cdot \nabla)\omega = \nabla\cdot\left[\left(\nu+\frac{\alpha_T}{\sigma_\omega}\right)\nabla \omega\right] + c_{\omega 1} \frac{\omega}{k_T} P_{k_T} - c_{\omega 2} \omega^2 + \left(\frac{C_{\omega R}}{f_W} - 1\right)\frac{\omega}{k_T}\left(R_{BP} + R_{NAT}\right) + C_{\omega 3}f_\omega \alpha_T f_W^2 \frac{\sqrt{k_T}}{d^3}

\tag{38}

We have again the time derivative, convection, and diffusion of \omega, as would be expected from any transport equation. But there are some additional terms here which are specifically added for the capturing of transition.

The first term appears on the right-hand side of Eq.(38). This term is:

\left(\frac{C_{\omega R}}{f_W} - 1\right)\frac{\omega}{k_T}\left(R_{BP} + R_{NAT}\right)\tag{39}This term becomes active once transition is taking place. For laminar flow, this term is zero, since we have R_{BP}=R_{NAT}=0. Its purpose is to increase the value of \omega in the region of transition. If we now look at Eq.(37), we can see that an increase in \omega results in a decrease of \nu_t. This is important, as we don’t want to fully switch on turbulent production when we have transition. This term limits the amount of eddy viscosity being produced.

Eventually, even if R_{BP} and R_{NAT} continue to rise, this term goes to zero as k_T increases. Thus, the role of this term is to provide reduced turbulence production in the transition region.

This term is similar to Eq.(26) in the \gamma-Re_{\theta,t},\,k-\omega SST model, where we scale the production term by the turbulent intermittency, i.e. \hat{P}_k=\gamma P_k. We do this to bring in the production of turbulence gradually. Thus, both turbulence models have a mechanism to gradually introduce turbulence into the flow, which makes sense, given that transition is a gradual transition from laminar to turbulent flows.

The second term in Eq.(38) we need to look at is given as:

C_{\omega 3}f_\omega \alpha_T f_W^2 \frac{\sqrt{k_T}}{d^3}\tag{40}Here, we have d^3, the distance from the wall, appearing in the denominator. This cubic wall distance relation is here to limit this term to the boundary layer. Its role is to provide an improved prediction of \omega in the boundary layer.

With these modifications, we have now constructed a more physically inspired model that works more like a traditional RANS model. Both the \gamma-Re_{\theta,t},\,k-\omega SST and k-k_l-\omega model are popular transition models and in use in transitional RANS predictions.

If you followed the discussion up to this point, you may ask yourself why we haven’t all switched to transitional RANS modelling. Why would anyone in their right mind continue to use classical RANS models, which, on paper, seem inferior? Well, classical RANS models have one winning advantage that transitional RANS models can’t compete with. For that, we need to talk about meshing.

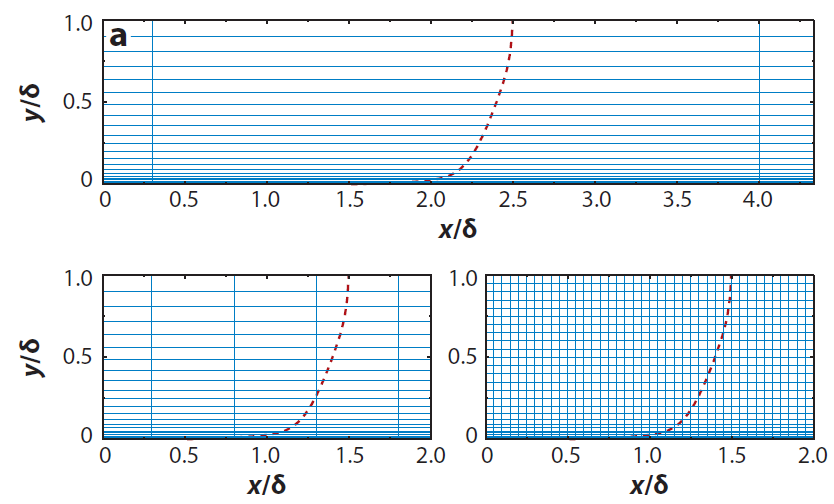

Meshing requirements for transitional RANS models

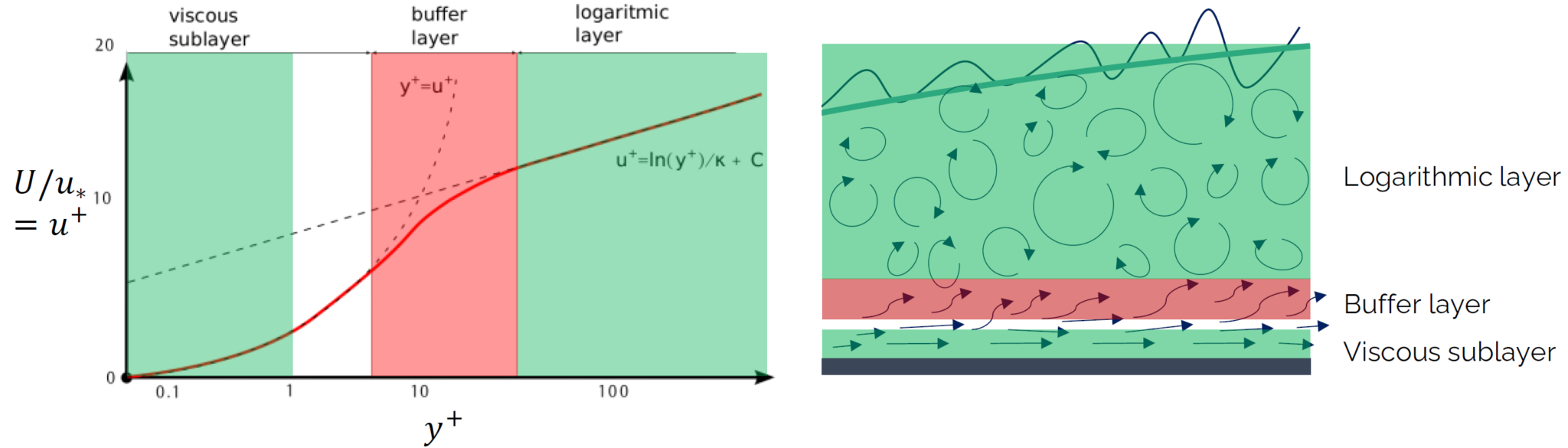

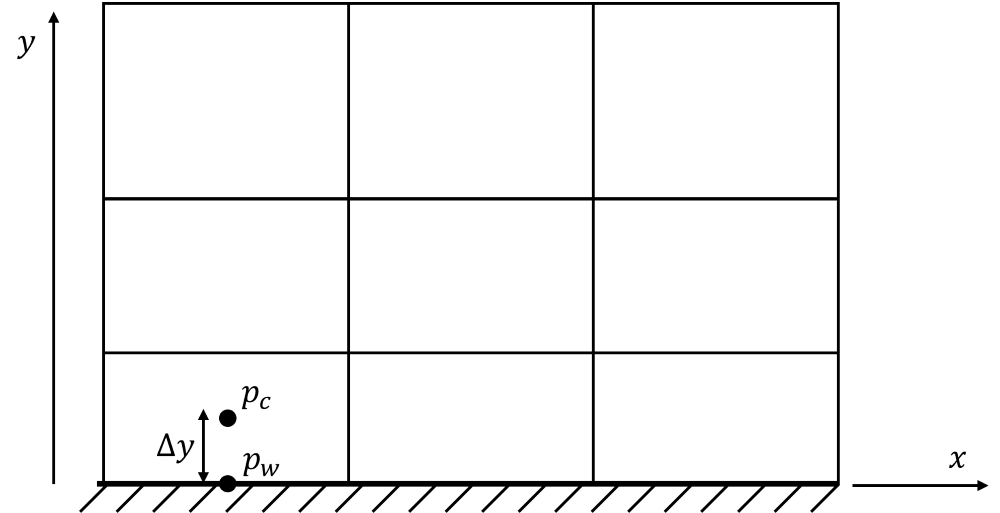

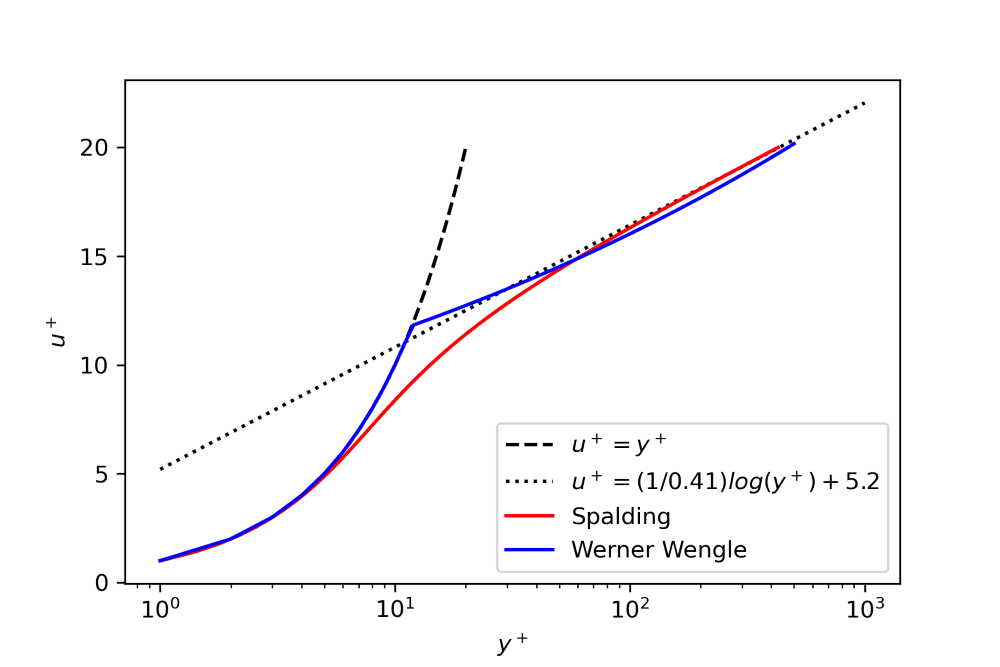

Traditional, or classical RANS models, i.e. those that assume the flow to be fully turbulent (and, as a result, cannot distinguish between laminar and turbulent flows), have pretty relaxed meshing requirements. In terms of the y^+ value, we can set almost any value we want. Nowadays, all RANS models that are implemented in commercial or widely used open-source solvers can work with either a wall-modelled or wall-resolved approach.

In the wall-resolved approach, we target a y^+ value of 1 everywhere at solid walls. This allows us to fully resolve the turbulent boundary layer. However, we can also opt for a wall-modelled approach, where the y^+ value may be somewhere between 30 to 300. It may even be larger if we do not particularly care about accurate gradients at the wall.

For transitional RNAS modelling, however, things are slightly different. The consensus in the literature seems to be that a very fine wall-resolved grid needs to be constructed. Typically, this means that we want to achieve y^+=0.1. Then, we want to grow the cells away from the wall, but with a very small expansion ratio. For classical RANS models, an expansion ratio may be set anywhere between 1.2 and 2, i.e. each cell that is layered on top of another cell, growing away from the wall, may be anywhere between 20%-100% larger than the previous cell.

For transitional RANS models, this expansion ratio is also typically set to a much stricter value of 1.05, i.e. each cell growing away from the wall will be only about 5% larger than the previous cell. This means that the computational cost for transitional RANS models is so large that they become almost unusable.

To make matters worse, if you look at LES, you have far less stringent meshing requirements (at least in the wall-normal direction), and transition is naturally captured. Granted, LES needs to be treated as an unsteady simulation, but if we were to use a transitional RANS model in unsteady mode, we may have slower performance than LES, due to an increased mesh resolution near the wall, while not getting as accurate results as LES near walls, depending on the subgrid scale model used.

For this reason, there are only very few places where transitional RANS makes sense to be used. You will unlikely find it in the wild being used for complex industrial applications, although it cannot be ruled out that transitional RANS models may provide superior results for some applications, which justify their computational cost.

Having said that, I want to leave the world of pure RANS models behind and now start looking at hybrid RANS-LES models, which have exploded in popularity since they were first introduced. Let us do just that in the next section.

When RANS isn’t enough: hybrid RANS-LES models

There is just one final item left on our to-do list, and these are hybrid RANS-LES methods. As alluded to above, these models are the latest and greatest in CFD turbulence research, and if you want to make a name for yourself in the field of CFD and turbulence modelling, this is likely the place you want to focus on.

The idea behind hybrid RANS-LES models is very simple: Use LES whenever feasible but switch to RANS if the grid requirements become prohibitively large for LES. All issues that arise in turbulence modelling are due to one single item: solid walls. Yes, a flow that has no solid walls is so trivial, that you can run a full-blown LES, possibly even a (coarse) DNS, on your microwave’s microchip (honestly these machines are getting too powerful, my microwave decided yesterday that I would have no more food unless I verified that I was the owner by entering a PIN on my mobile).

One of my PhD students did all of his LES work on a standard laptop (no fancy hardware), and those simulations would typically take about 1.5 hours to run. A DNS would be entirely feasible for the same geometry, perhaps taking a day or so. The case we were looking at was the Taylor-Green vortex problem, and this is just a box with periodic boundary conditions where a specific initial condition is allowed to freely evolve.

But, once you throw a wall into the mix, all of a sudden you’ve opened Pandora’s box, out of which the devil emerges, and all of a sudden you have to deal with this pesky little thing called a turbulent boundary layer. Now, you have to spend some serious time considering what grid resolution you should be aiming for, if that would work with your chosen turbulence modelling approach, and potentially if CFD is really right for you in the first place or if you should have done something with marketing instead.

But let’s assume you aren’t easily scared by turbulence, let’s have a look at what hybrid RANS-LES models are and which approaches have been established in the literature. Specifically, we will look at:

- Detached Eddy Simulations (DES) and derivatives (DDES, IDDES)

- Scale-adaptive Simulations (SAS)

- Wall-modelled LES (WMLES)

But first, let us do a head-to-head comparison of RANS and LES and see what their main difference is.

A head-to-head comparison between RANS and LES

In my article on RANS turbulence modelling, we looked at how we can derive the time-averaged, or Reynolds-averaged Navier-Stokes (RANS) equations. We saw that by using the Reynolds decomposition, which decomposes a flow variable \phi into its mean (\bar{\phi}) and fluctuation (\phi') component, and applying that to our momentum equation, we obtain the following time-averaged momentum equation:

\frac{\partial \bar{\mathbf{u}}}{\partial t} + (\bar{\mathbf{u}}\cdot\nabla)\bar{\mathbf{u}} = -\frac{1}{\rho}\nabla\bar{p} + \nu\nabla^2 \bar{\mathbf{u}} + \nabla\cdot\tau^{RANS}

\tag{41}

Here, \tau^{RANS} is the Reynolds stress tensor. In my article on Large Eddy Simulations (LES), on the other hand, we looked at how to derive the spatial-averaged Navier-Stokes equations, which resulted in:

\frac{\partial \bar{\mathbf{u}}}{\partial t} + (\bar{\mathbf{u}}\cdot\nabla)\bar{\mathbf{u}} = -\frac{1}{\rho}\nabla\bar{p} + \nu\nabla^2 \bar{\mathbf{u}} + \nabla\cdot\tau^{SGS}

\tag{42}

Here, the additional stresses \tau^{SGS} are the subgrid-scale stresses that are not resolved by LES. Thus, conceptually, the main difference between RANS and LES is that we take a time-averaged view of our flow field in RANS, while we retain the time history in LES, but we introduce spatial averaging so that we obtain a local averaged view of the flow field in space.

These are two very different approaches, but if we look at Eq.(41) and Eq.(42), and we pretend for a second that we do not know anything about Navier-Stokes, CFD, or fluid dynamics in general, then we would say that these equations are identical, with the difference in notation for the stress tensor, i.e. one equation uses \tau^{RANS}, the other uses \tau^{SGS}.

However, if we look at their definition, we would see that they encode different information. For RANS, we have:

\tau^{RANS} = -\overline{\mathbf{u}'\mathbf{u}'}

\tag{43}

This stress tensor contains the fluctuating velocity components that are not resolved by the flow. In LES, on the other hand, we have:

\tau^{SGS} = \left(\bar{\mathbf{u}}\bar{\mathbf{u}} - \overline{\bar{\mathbf{u}}\bar{\mathbf{u}}}\right)

+ \left(-\overline{\bar{\mathbf{u}}\mathbf{u}'} - \overline{\mathbf{u}'\bar{\mathbf{u}}}\right)

+ \left(-\overline{\mathbf{u}'\mathbf{u}'}\right)

\tag{44}

This contains the velocity components of the resolved velocity (\bar{\mathbf{u}}) and unresolved (\mathbf{u}') velocity field. In both RANS and LES, the stress tensor represents stresses we cannot resolve, i.e. in RANS it is the velocity fluctuations that we no longer resolve with our time-averaged view on the flow, while in LES it is the fluctuating velocity of eddies that are smaller than our grid spacing. Thus, we need to use some form of modelling to find approximate values for both \tau^{RANS} and \tau^{SGS}.

As it turns out, both approaches make use of Bousinesq’s eddy viscosity hypothesis. Granted, there are a few different ways to approximate the stresses, for example, in RANS we may use the Shear Stress Transport (SST) idea of Bradshaw instead which is famously used in the k-\omega SST model, but for the most part, Boussinesq’s eddy viscosity is used by most turbulence models.

So, even if we see that the exact definition for \tau^{RANS} (Eq.(43)) and \tau^{SGS} (Eq.(44)) is very different, we decide to model both terms in the same way. Thus, for RANS, we have:

\tau^{RANS} \approx -2\mu_t\mathbf{S}+\frac{2}{3}k\delta_{ij}

\tag{45}

For LES, on the other hand, we have:

\tau^{SGS} \approx -2\mu_t\mathbf{S}

\tag{46}

Now, you may be saying that these equations are not the same. However, their final form is just different. They are both based on the same eddy viscosity assumption, and the second term we can see in Eq.(45), i.e. (2/3)k\delta_{ij} is written in a different form for LES and typically absorbed into the pressure. So, it is not visible in Eq.(46), but it is accounted for in the modelling. If you want to see how, you can check my write-up on how the Navier-Stokes equations are filtered in LES.

Thus, we can see that both RANS and LES share essentially the same mathematical equations. From an exact mathematical point of view, the unresolved stresses are different. However, we most commonly model these unresolved stresses with the same closure in both RANS and LES, making both approaches essentially the same.

They are not identical, though, as we use different grid requirements in RANS and LES. This will lead to a different resolution and accuracy for both methods, but also to a different computational cost.

Thus, if both RANS and LES are so similar, can we potentially mix their description? Yes, of course, and this will lead us to hybrid RANS-LES models. The idea is to stick to LES whenever the grid resolution is good enough, but once we approach a solid wall, we simply switch to a RANS description, allowing us to use a lot fewer computational cells within the turbulent boundary layer.

If you think that the computational savings for hybrid RANS-LES is due to the reduced number of cells in the boundary layer, you would only be partially right. In fact, the biggest time saving is achieved somewhere else. Given that LES is necessarily time-dependent, i.e. we have to run transient simulations, we have to determine a suitable time step.

In CFD, we typically use the CFL number to determine a stable time step. As we saw in my article on explicit and implicit time integration techniques, the CFL number is really just a non-dimensional time step and is defined as:

CFL=\frac{u\Delta t}{\Delta x}\tag{47}Solving this for the time step \Delta t, we obtain:

\Delta t = \frac{CFL \Delta x}{u}\tag{48}If we now consider a flow that we want to simulate with both LES and a hybrid RANS-LES approach, then we can say that the velocity we compute in both cases should be the same. Thus, the velocity u will be the same. We further set the CFL number to be the same in both simulations. Since we have much stricter grid requirements in LES, we will get much smaller cells in LES close to the wall. Therefore, the value for \Delta x will be smaller in LES than for RANS, or hybrid RANS-LES, where we can use RANS grid requirements near solid walls.

We can therefore say that, in general, we have:

\Delta t^{LES} \ll \Delta t^{RANS}\tag{49}Thus, if we want to advance both solutions in time until a certain total time T, given that LES’ time step is much smaller than the time step in RANS, or hybrid RANS-LES, we have to perform fewer time steps in total to reach the final time T. Since we can only parallelise our solution in space, not in time (although this is an area that is receiving some research as well), our main bottleneck in LES is that we have to compute a lot of time steps that cannot be parallelised.

Even if we have a supercomputer available, all that it can do for us is to make the simulation time per time step as small as possible, but we still have to perform more time steps compared to pure RANS or hybrid RANS-LES to reach T. Therefore, hybrid RANS-LES is actually gaining most of its computational advantage not by reducing the mesh size, but rather by reducing the number of time steps required to advance a solution in time.

However, this discussion only considers the CFL number. For example, if we have an explicit time stepping, then we are limited to a CFL number of one, typically. In this case, hybrid RANS-LES will perform better than LES alone. If we use a fully implicit solver, we could bump up the CFL number for LES to get somewhat faster computation in time, at the cost of smearing the solution in time.

However, there are cases where the numerics are not the limiting factor. For example, let’s say we deal with combustion processes and we want to model the formation of CO_2. For combustion, we need to take the chemistry into account, and typically, chemical reactions happen at a time scale which is much lower than what our CFL number is giving us. We could express that as:

\Delta t^{chemistry} \ll \Delta t^{LES}(CFL=1) \ll \Delta t^{RANS}(CFL=1) \tag{50}Thus, in this case, we would have to run both the hybrid RANS-LES and the pure LES simulation at the same time step, as this is now governed by the chemical reactions. In this case, there is no computational advantage of using hybrid RANS-LES over LES. In fact, if we only deal with the combustion process, and not the entire geometry of a combustion chamber, for example, we may not even have any solid walls to resolve. In this case, we would typically stick to LES.

Various ways have been formulated in the literature for hybrid RANS-LES models, and we will look at the three most commonly used methods. Let us start with arguably the most common and most popular method: Detached Eddy Simulations and its variants.

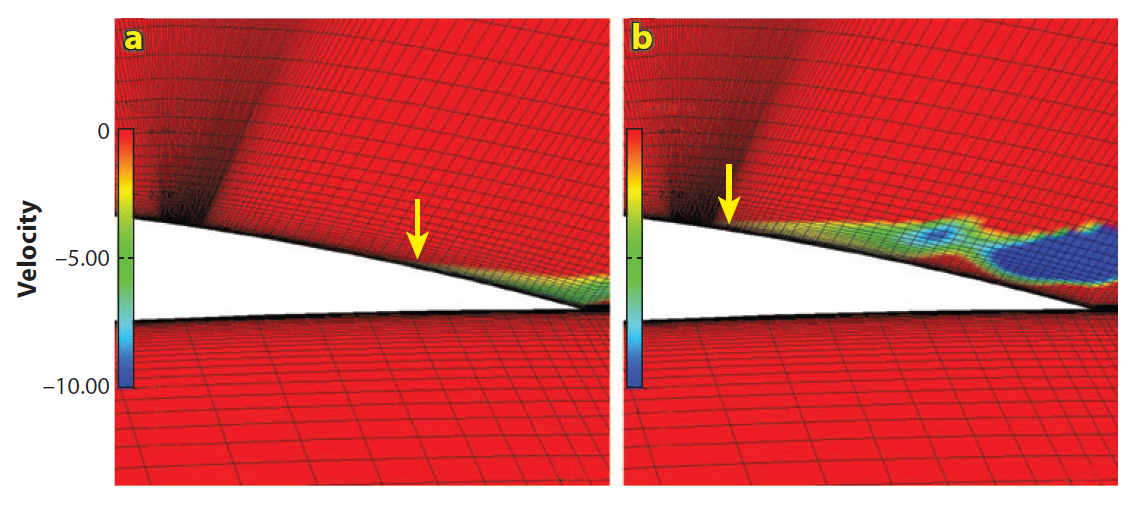

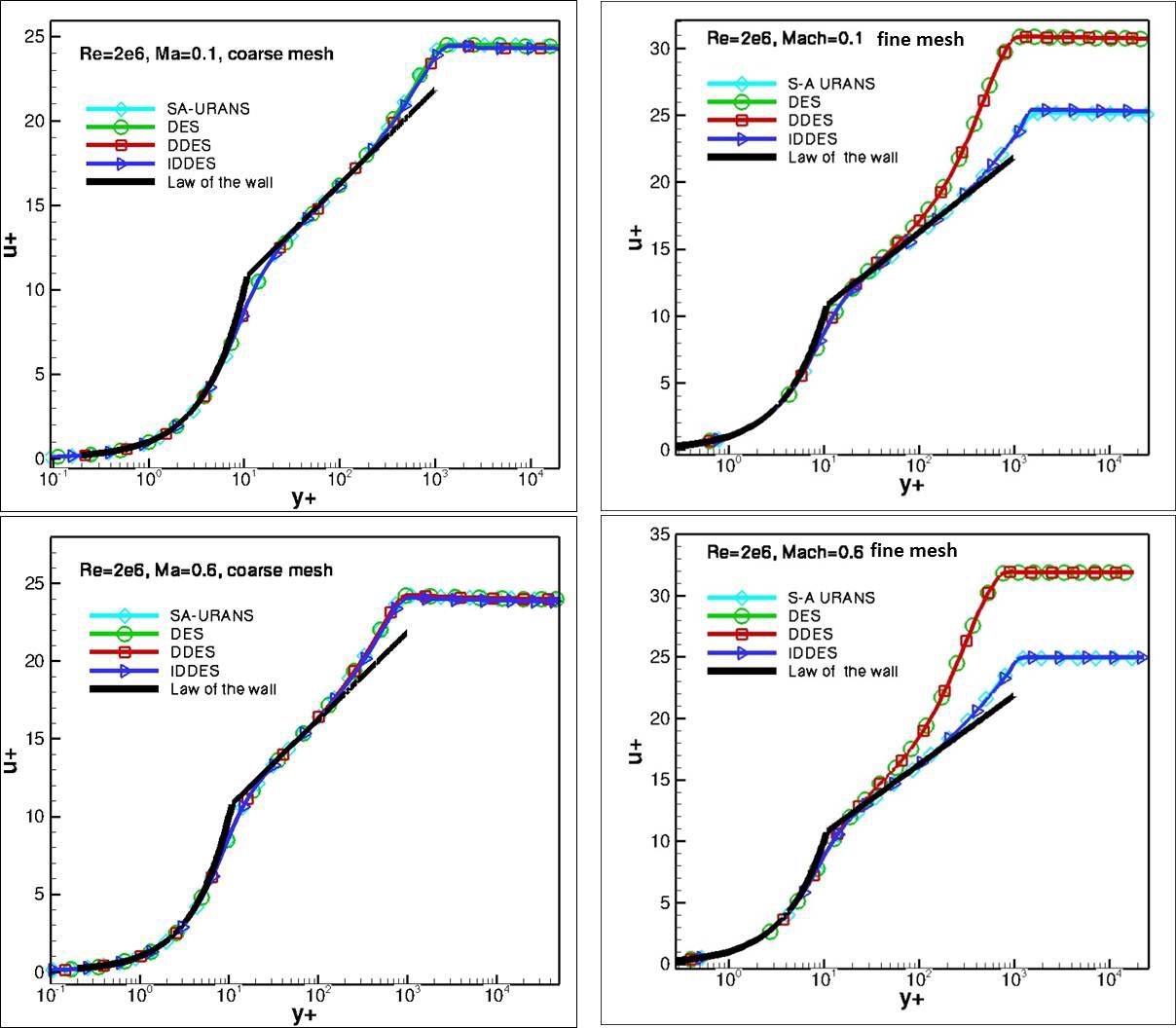

Detached-eddy simulations (DES)

Detached Eddy Simulations, or DES, was introduced by Spalart in 1997, so it has been around for quite some time. The main idea behind DES, as a hybrid RANS-LES approach, is that we solve the RANS equations everywhere in the flow, but we change the definition for the turbulence length scale computation locally based on where we are in the flow.

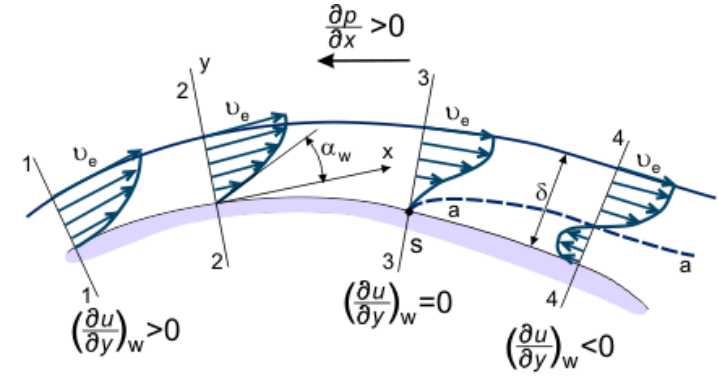

Within the turbulent boundary layer, we compute the turbulent length scale as we would in a classical RANS model, and then, in the farfield, the turbulent length scale is computed as in LES in the farfield. This is shown schematically in the following figure:

Thus, all we need to do is to find a suitable RANS model and modify its turbulent length scale. If you were Spalart in 1997, having just introduced your celebrated Spalart-Allmaras turbulence model a few years earlier, which turbulence model would you choose? Of course, your own! Thus, the very first DES model which was introduced was the Spalart-Allmaras DES model, or SA-DES model. Let us review the transport equation for the Spalart-Allmaras model again. It is given as:

\frac{\partial \tilde{\nu}}{\partial t} + (\mathbf{u}\cdot\nabla)\tilde{\nu} = \frac{1}{\sigma}\nabla\cdot[(\nu+\tilde{\nu})\nabla\tilde{\nu}] + \frac{c_{b2}}{\sigma}\nabla\tilde{\nu}\cdot\nabla\tilde{\nu} + c_{b1}(1-f_{t2})\tilde{S}\tilde{\nu} + f_{f2}\frac{c_{b1}}{\kappa^2}\left(\frac{\tilde{\nu}}{d}\right)^2-c_{w1}f_w\left(\frac{\tilde{\nu}}{d}\right)^2

\tag{80}

Here, we see that both the fourth and fifth term on the right depend on the variable 1/d, where d is the distance to the closest solid wall. These terms were introduced by Spalart and Allmaras to model the effect of solid walls; that is, close to the wall, turbulent eddies are elongated due to the presence of the wall. These terms supply additional correction to the computation of the turbulent viscosity \nu_t, which is directly proportional to \tilde{\nu}, as computed by the Spalart-Allmaras model.

However, as we move into the farfield, the fourth and fifth terms become very small and are effectively removed. This makes sense; the turbulent eddies are no longer deformed by any solid wall. However, it also means that away from walls the destruction terms for \tilde{\nu} essentially go to zero (fourth and fifth term on the right-hand side of Eq.(80)). The production term, the third term on the right-hand side, is therefore not balanced.

This works for RANS, as our grid is typically much coarser than LES, as we only want to resolve time-averaged flow properties. Therefore, \tilde{S}, which is used in the production term on the right side, will be fairly constant (time-averaged). If there are no mean flow gradients (which is typically the case for flows far away from solid walls), then there is no production as \tilde{S}=0, as \tilde{S} itself is based on the vorticity tensor \Omega, which is constructed from local mean floew velocity gradients.

Thus, in the farfield, the Spalart-Allmaras model is somewhat self-regulating. While no dissipation is present, the production term itself will go to zero.

So far, the theory, but now let’s think about what happens in reality. Let’s imagine the flow through an aircraft engine, mounted under a wing. The wing itself will produce a turbulent wake, and the engine will create a jet, which interacts with the wake. If we use the Spalart-Allmaras model here, then we are saying that far away from the wall, we will have no destruction. Jet, we are dealing with wakes and jets, which exist far downstream of the flow.

The production term will be very much active, as the wake and jet produce mean flow velocity gradients, and so the vorticity tensor will contain non-zero values. This, in turn, will increase the production of \tilde{\nu}. An increase of \tilde{\nu} will increase \nu_t. The effect of \nu_t is often combined with the laminar viscosity to form an effective viscosity

\nu_{eff}=\nu + \nu_t

\tag{52}

Thus, if the production of \tilde{\nu} is not matched by any destruction mechanism (as 1/d ensures that the destruction terms go to zero away from the wall, i.e. in the wake and jet), then we will create an increasing value of \nu_{eff}. In the Navier-Stokes equation, we use this effective kinematic viscosity instead of the laminar viscosity to scale our diffusion term; that is, instead of writing \nu\nabla^2\mathbf{u} for the diffusion, we now have \nu_{eff}\nabla^2\mathbf{u}.

Thus, as \nu_{eff} is increased, so is the diffusion. The diffusion operator works by smoothing out any gradients that are present. Thus, mean flow gradients will be smoothed (damped) to a point where we have reached a smooth flow field. This will effectively dampen any turbulent activities, especially fluctuations (regardless of whether these fluctuations are physical or not).

So, what is the solution? We want to ensure that the destruction terms do not fully go to zero in the farfield. If we can ensure that the destruction terms remain switched on, even in the wake, then we effectively balance production anywhere in the flow; this will limit the production of \tilde{\nu} (and thus \nu_t), which, in turn, will not excessively dampen turbulent fluctuations.

If we achieve this, then we can use our Spalart-Allmaras model within the LES region, which now effectively acts like a subgrid-scale model. So we don’t use any of the existing subgrid-scale models, but instead, a modified description of the Spalart-Allmaras model. So how do we compute this new length scale?

Well, we define two separate length scales. On the RANS grid, we saw that our length scale is given by

l^{RANS}=d

\tag{53}

This is the distance from the wall that we saw appear in the fourth and fifth term in Eq.(80). For LES, however, we link our length scale to the grid resolution, similar to how the Smagorinsky model defines its length scale. If you have no idea what I am talking about, I have derived the Smagorinsky model, where you can see in detail how its length scale is constructed. We say that the length scale in LES is given by:

l^{LES}=C_{LES}\Delta

\tag{54}

Here, \Delta is a representative grid spacing. If we work on a structured grid, we may take it as \Delta = \max(\Delta_x, \Delta_y, \Delta_z). On an unstructured grid, we may compute the cube-root of the cell’s volume \Delta_i=\sqrt[3]{V_i}. C_{LES} is a scaling value that approximates the turbulent eddy sizes that are no longer resolved by the grid. As a consequence, it has to be in the range of 0 \lt C_{LES} \lt 1. A value of 0.1 to 0.2 is common here.

Now we have to combine this information somehow. If we were to program this as an algorithm, we could, in somewhat C++-styled pseudo code, write the following:

double lengthScale = 0.0;

if (region == "RANS") {

lengthScale = distanceFromClosestWall;

} else if (region == "LES") {

lengthScale = std::pow(currentCellVolume, 1.0/3.0);

}Happy days, right? Well, almost. The problem with this code is that we don’t have a clear way to express whether we are in a RANS or LES region. Evaluating the if/else expression will become rather difficult.

But, this is a common issue in turbulence modelling, i.e. we want to differentiate between laminar and turbulence, boundary layers and farfield, or, as in this case, between two different regions where different modelling approaches are to be used. Whenever we are faced with this issue, we just compute both variables (in this case, the length scale for both the RANS and LES region) and, depending on what we are trying to achieve, pick either the largest or smallest value of the two.

If you look through this article alone, we used the \max and \min operators a few times, and we do exactly the same here. Let’s think about which one is most suitable. Close to the wall, we will have small values for d. If our grid is stretched in the wall parallel direction, then the cube root, i.e. \sqrt[3]{V_i} of the Cell at index i will be larger than the height over the solid wall, i.e. d. In the farfield, d will continuously grow and be far larger than the cube root of the local cell size.

Therefore, in order to achieve RANS-like resolution in the boundary layer and LES-like resolution in the farfield, we need the RANS length scale to be used within the boundary layer and the LES length scale in the farfield. This is achieved using the \min function, and this can be expressed as:

l=\min[l^{RANS}, l^{LES}]

\tag{56}

Here, l^{RANS} and l^{LES} are given by Eq.(53) and Eq.(54), respectively. However, in the context of DES, Eq.(54) is typically modified to:

l^{DES} = C_{DES}\Psi\Delta

\tag{56}

Here, \Delta in Eq.(56) is computed in exactly the same way as in Eq.(54). The additional function \Psi was introduced by Spalart to make this approach work with the Spalart-Allmaras model; however, for other RANS models, this variable is typically set to \Psi=1 and so does not require further attention (see it as a Spalart-Allmaras correction only).

For example, another popular DES model is based on Menter’s k-\omega SST model. In this model, the turbulent kinetic energy equation is given as: